Abstract

In recent years, depth cameras have become a widely available sensor type that captures depth images at real-time frame rates. Even though recent approaches have shown that 3D pose estimation from monocular 2.5D depth images has become feasible, challenging problems remain due to strong noise in the depth data and self-occlusions in the motions being captured. In this paper, we present an efficient and robust pose estimation framework for tracking full-body motions from a single depth image stream. Following a data-driven hybrid strategy that combines local optimization with global retrieval techniques, we contribute several technical improvements that lead to speed-ups of an order of magnitude compared to previous approaches. In particular, we introduce a variant of Dijkstra’s algorithm to efficiently extract pose features from the depth data and describe a novel late-fusion scheme based on an efficiently computable sparse Hausdorff distance to combine local and global pose estimates. Our experiments show that the combination of these techniques facilitates real-time tracking with stable results even for fast and complex motions, making the framework applicable to a wide range of interactive scenarios.

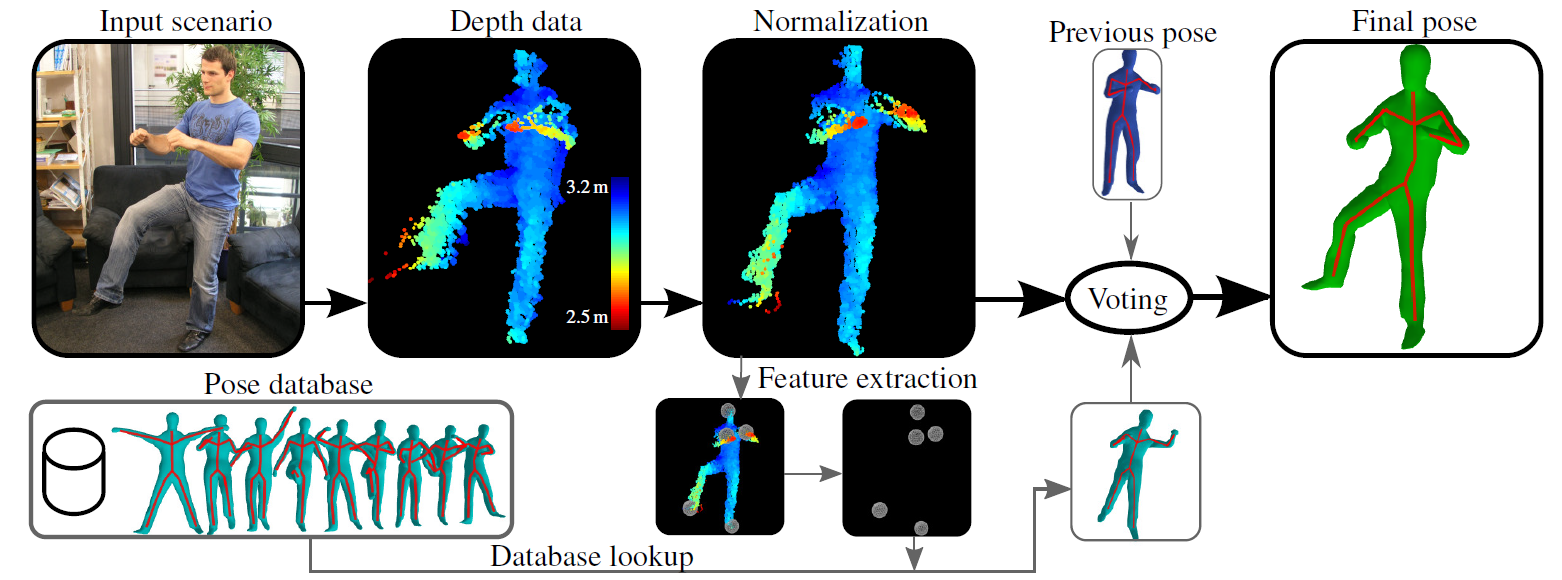

Overview of our proposed pose estimation framework.
